Feb 19, 2007

Some notable moments in recorded life

Recent progress in miniaturization and storage capacity has made it possible to record, access, retrieve, and potentially share all the information generated over a user's or object's lifetime.

Two of the most important projects in this area are LifeLog (initially funded by DARPA, then killed by the Pentagon in 2004) and Microsoft's MyLifeBits.

I am fascinated by how these new technologies could radically change psychotherapy and, more generally, how they could fundamentally affect our lives.

In this article, entitled On the Record, All the Time, Scott Carlson traces the story and the implications of the introduction of lifelogging. In the article I found a list of some notable moments in "recorded life":

1900s: The Brownie camera makes photography available to the masses.

1940: President Franklin D. Roosevelt begins recording press conferences and some meetings.

1945: Vannevar Bush, a prominent American scientist, predicts a time when scientists will be photographing their lab work and storing their correspondence in a machine called a "memex."

1960s: Presidents John F. Kennedy and Lyndon B. Johnson record meetings and phone conversations for posterity, which later provides hundreds of hours of programming for C-Span.

1969: The microcassette goes on the market and becomes the voice-recording medium of choice.

1973: An American Family, documenting the domestic drama of the Louds, is the first reality-TV show.

1973-74: President Richard Nixon releases the Watergate tapes (just some of more than 3,500 hours of conversations that he had recorded), which leads to his resignation.

Late 1970s: Steve Mann, a professor at the University of Toronto, begins dabbling in wearable computing.

Mid-1980s: Fitness nuts are wearing stretch pants and leggings, along with wristwatch-sized devices that measure heart rate and blood pressure. The heart monitors can cost $200 or more.

1991: The first Webcam goes online.

Mid-1990s: Cellphones, digital cameras, and the Internet become commonplace.

1995: Gordon Bell, a computer engineer and entrepreneur, gets involved with Microsoft Research and begins work that will lead him to record various aspects of his life for the MyLifeBits project.

1999: Microsoft Research invents prototype SenseCams, cameras that hang around the neck and continuously snap pictures.

2000: Scrapbooking has a renaissance, leading to new retail stores devoted to a hobby industry now worth $2-billion.

2003: MySpace debuts.

2004: The Final Cut, starring Robin Williams, describes a future where memories are recorded on implanted chips. The Defense Advanced Research Projects Agency drops a lifelogging project amid a furor over privacy. A workshop on the "Continuous Archival and Retrieval of Personal Experiences" convenes at Columbia University.

2005: YouTube appears.

2006: Nokia releases Lifeblog 2.0, which allows people to upload audio notes, photographs, location information, and other records of life events to a database.

00:25 Posted in Research tools | Permalink | Comments (0) | Tags: future interfaces

Jan 22, 2007

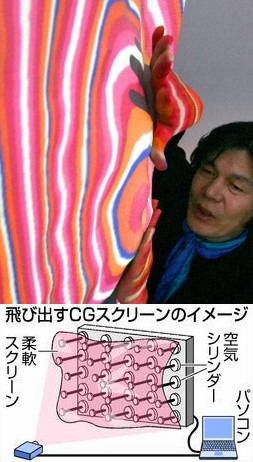

Gemotion screen shows video in living 3D

From Pink Tentacle

Gemotion is a soft, ‘living’ display that bulges and collapses in sync with the graphics on the screen, creating visuals that literally pop out at the viewer.

Yoichiro Kawaguchi, a well-known computer graphics artist and University of Tokyo professor, created Gemotion by arranging 72 air cylinders behind a flexible, 100 x 60 cm (39 x 24 inch) screen. As video is projected onto the screen, image data is relayed to the cylinders, which then push and pull on the screen accordingly.
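As a rough illustration of how image data might be relayed to the cylinders, here is a minimal Python sketch. It is not Kawaguchi's actual method: the 6 x 12 grid layout, the use of brightness as the driving signal, and the function name `frame_to_extensions` are all assumptions made for the example. Only the total of 72 cylinders comes from the description above.

```python
from typing import List

# Assumed layout: 6 rows x 12 columns = 72 cylinders (the real arrangement is not stated).
ROWS, COLS = 6, 12


def frame_to_extensions(frame: List[List[float]],
                        rows: int = ROWS,
                        cols: int = COLS) -> List[List[float]]:
    """Average a grayscale frame (values 0-255) into one value per cylinder.

    Returns a rows x cols grid of extensions between 0.0 (fully retracted)
    and 1.0 (fully extended). Brighter regions push the screen out further,
    which is an assumption of this sketch.
    """
    height, width = len(frame), len(frame[0])
    if height % rows or width % cols:
        raise ValueError("frame size must be a multiple of the cylinder grid")
    block_h, block_w = height // rows, width // cols

    extensions = []
    for r in range(rows):
        row_out = []
        for c in range(cols):
            # Sum the pixels that sit in front of this cylinder.
            total = 0.0
            for y in range(r * block_h, (r + 1) * block_h):
                for x in range(c * block_w, (c + 1) * block_w):
                    total += frame[y][x]
            row_out.append(total / (block_h * block_w) / 255.0)
        extensions.append(row_out)
    return extensions
```

A frame that is bright on the left and dark on the right would, under these assumptions, extend the left-hand cylinders and retract the right-hand ones.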

“If used with games, TV or cinema, the screen could give images an element of power never seen before. It could lead to completely new forms of media,” says Kawaguchi.

The Gemotion screen will be on display from January 21 to February 4 as part of a media art exhibit (called Nihon no hyogen-ryoku) at the National Art Center, Tokyo, which recently opened in Roppongi.

22:13 Posted in Future interfaces | Permalink | Comments (0) | Tags: future interfaces

Jan 09, 2007

5 Courts

5 Courts is a revolutionary multi-player, multi-site game and arts space played across five cities, including York, Leeds, Bradford and Sheffield, in October 2006. Players use their own bodies to send balls of projected light across the playing space, aiming for goals representing the other cities. Entirely interactive, it's a competition to see which city has the fewest light balls in its square when the time runs out. Designed to be aesthetically beautiful and great fun to play and watch, games are a minute long and run throughout the night. 5 Courts was conceived, designed and programmed by digital media artists KMA (Kit Monkman & Tom Wexler). The piece was commissioned by Illuminate as part of the Light Night festival. [blogged by Martin Rieser on Mobile Audience]

22:55 Posted in Cyberart | Permalink | Comments (0) | Tags: future interfaces

Dec 14, 2006

reacTable

23:54 Posted in Future interfaces | Permalink | Comments (0) | Tags: future interfaces

Dec 06, 2006

Pocket Projectors

Via KurzweilAI.net

The Microvision system, composed of semiconductor lasers and a tiny mirror, will be small enough to integrate projection technology into a phone or an iPod.

18:39 Posted in Future interfaces | Permalink | Comments (0) | Tags: future interfaces

Nov 07, 2006

The future of music experience

Check out this YouTube video showing the reacTable, an amazing musical instrument with a tangible interface.

23:00 Posted in Future interfaces | Permalink | Comments (0) | Tags: future interfaces

Oct 30, 2006

Throwable game-controllers

Re-blogged from New Scientist Tech

"Just what the doctor ordered? A new breed of throwable games controllers could turn computer gaming into a healthy pastime, reckons one Californian inventor. His "tossable peripherals" aim to get lazy console gamers up off the couch and out into the fresh air.

Each controller resembles a normal throwable object, like a beach ball, a football or a Frisbee. But they also connect via WiFi to a games console, like the PlayStation Portable. And each also contains an accelerometer capable of detecting speed and impact, an altimeter, a timer and a GPS receiver.

The connected console can then orchestrate a game of catch, awarding points for a good catch or deducting them if the peripheral is dropped hard on the ground. Or perhaps the challenge could be to throw the object furthest, highest or fastest, with the connected computer keeping track of different competitors' scores.

Hardcore gamers, who cannot bear to be separated from a computer screen, could wear a head-mounted display that shows scores and other information. The peripheral can also emit bleeps when it has been still for too long, to help the owner locate it in the long grass."
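To make the scoring idea in the quoted article concrete, here is a small Python sketch. It is purely illustrative and not taken from the patent application: the `ThrowEvent` fields, the 8 g "hard drop" threshold, and the point values are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical threshold: an impact at or above this counts as a hard drop.
HARD_IMPACT_G = 8.0


@dataclass
class ThrowEvent:
    peak_height_m: float  # as an altimeter might report it
    impact_g: float       # accelerometer reading when the flight ends


def score_throw(event: ThrowEvent, catch_points: int = 10, drop_penalty: int = 5) -> int:
    """Award points for a soft landing (a catch), deduct them for a hard drop."""
    if event.impact_g >= HARD_IMPACT_G:
        return -drop_penalty
    return catch_points


def leaderboard(throws: Dict[str, List[ThrowEvent]]) -> List[Tuple[str, int]]:
    """Total each player's points and sort from best to worst."""
    totals = {player: sum(score_throw(e) for e in events)
              for player, events in throws.items()}
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


def highest_thrower(throws: Dict[str, List[ThrowEvent]]) -> str:
    """Return the player with the single highest throw."""
    return max(throws,
               key=lambda p: max((e.peak_height_m for e in throws[p]), default=0.0))
```

The same structure could keep separate tallies for the furthest or fastest throw by adding fields for GPS distance or flight time.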

Read the full throwable game controller patent application

15:30 Posted in Future interfaces | Permalink | Comments (0) | Tags: future interfaces

Oct 27, 2006

The science of the invisible

From CBC News

British and U.S. researchers have developed a cloak that renders an object invisible to microwaves. The shield, a set of concentric metamaterial rings, redirects microwave beams so they flow around a “hidden” object inside. The cloak is designed to operate over a band of microwave frequencies and works only in two dimensions.

Metamaterial Electromagnetic Cloak at Microwave Frequencies.

Authors: D. Schurig, J.J. Mock, B.J. Justice, S.A. Cummer, J.B. Pendry, A.F. Starr, D.R. Smith.

Science DOI: 10.1126/science.1133628

Recently published theory has suggested that a cloak of invisibility is in principle possible, at least over a narrow frequency band. We present here the first practical realization of such a cloak: in our demonstration, a copper cylinder is 'hidden' inside a cloak constructed according to the previous theoretical prescription. The cloak is constructed using artificially structured metamaterials, designed for operation over a band of microwave frequencies. The cloak decreases scattering from the hidden object whilst at the same time reducing its shadow, so that the cloak and object combined begin to resemble free space.

10:33 | Permalink | Comments (0) | Tags: future interfaces

Oct 26, 2006

DNA-based switch will allow interfacing organisms with computers

Re-blogged from KurzweilAI.net

Researchers at the University of Portsmouth have developed an electronic switch based on DNA, a world-first bionanotechnology breakthrough that provides the foundation for the interface between living organisms and the computer world...

Read the full story here

19:37 Posted in Future interfaces | Permalink | Comments (0) | Tags: future interfaces

Oct 16, 2006

I/O Plant

Mauro Cherubini's always interesting moleskin has a post about a tool for designing content that uses plants as an input-output interface. Dubbed I/O Plant, the system lets users connect actuators, sensors and database servers to living plants, making them part of an electric circuit or a network terminal.

22:55 Posted in Future interfaces | Permalink | Comments (0) | Tags: future interfaces

Sep 24, 2006

SmartRetina used as a navigating tool in Google Earth

Via Nastypixel

SmartRetina is a system that captures the user’s hand gestures and recognizes them as computer actions.

22:51 Posted in Future interfaces | Permalink | Comments (0) | Tags: future interfaces

Aug 04, 2006

Tablescape Plus: Upstanding Tiny Displays on Tabletop Displays

Placing physical objects on a tabletop display is common for intuitive tangible input. The overall goal of our project is to expand the possibilities of interactive physical objects. By utilizing the tabletop objects as projection screens as well as input equipment, we can change the appearance and role of each object easily. To achieve this goal, we propose a novel tablescape display system, "Tablescape Plus." Tablescape Plus can project separate images on the tabletop horizontal screen and on vertically placed objects simultaneously. No special electronic devices are installed on these objects. Instead, we attached a paper marker underneath these objects for vision-based recognition. Projected images change according to the angle, position and ID of each placed object. In addition, the displayed images are not occluded by users' hands since all equipment is installed inside the table.

Example application :: Tabletop Theater (pictured above): When you put a tiny display on a tabletop miniature park, an animated character appears and moves according to the display's position and direction. In addition, the user can change the actions of the characters by changing their positional relationships.

17:21 Posted in Future interfaces | Permalink | Comments (0) | Tags: future interfaces

Jul 18, 2006

"TV for the brain" patented by Sony

00:21 Posted in Future interfaces | Permalink | Comments (0) | Tags: future interfaces

Jul 05, 2006

Hearing with Your Eyes

Via Medgadget

Manabe Hiroyuki has developed a machine that could eventually allow you to control things in your environment simply by looking at them. From the abstract:

A headphone-type gaze detector for a full-time wearable interface is proposed. It uses a Kalman filter to analyze multiple channels of EOG signals measured at the locations of headphone cushions to estimate gaze direction. Evaluations show that the average estimation error is 4.4° (horizontal) and 8.3° (vertical), and that the drift is suppressed to the same level as in ordinary EOG. The method is especially robust against signal anomalies. Selecting a real object from among many surrounding ones is one possible application of this headphone gaze detector.

Soon we'll be able to turn on the Barry Manilow and turn down the lights with a simple sexy stare.
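For readers unfamiliar with the Kalman filter mentioned in the abstract, the following Python sketch shows the basic predict-and-update cycle on a single noisy angle reading. It is a deliberately simplified illustration, not the authors' model: the real system fuses multiple EOG channels, whereas this version assumes one channel, a random-walk model of gaze angle, and made-up noise variances.

```python
from typing import Iterable, List


def kalman_smooth(measurements: Iterable[float],
                  process_var: float = 0.5,
                  measurement_var: float = 4.0,
                  initial_estimate: float = 0.0,
                  initial_var: float = 100.0) -> List[float]:
    """Smooth a stream of noisy gaze angles (degrees) with a scalar Kalman filter.

    All variances are assumed values chosen for the example.
    """
    estimate, variance = initial_estimate, initial_var
    smoothed = []
    for z in measurements:
        # Predict: under a random-walk model the estimate stays put,
        # but our uncertainty about it grows.
        variance += process_var

        # Update: blend prediction and measurement according to their uncertainties.
        gain = variance / (variance + measurement_var)
        estimate += gain * (z - estimate)
        variance *= (1.0 - gain)

        smoothed.append(estimate)
    return smoothed
```

With a steady gaze the estimate converges on the true angle, and jitter in the readings is damped rather than passed straight through.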

23:17 Posted in Future interfaces | Permalink | Comments (0) | Tags: future interfaces

Jun 04, 2006

Interface and Society

Re-blogged from Networked Performance

Interface and Society: Deadline call for works: July 1 - see call; Public Private Interface workshop: June 10-13; Mobile troops workshop: September 13-16; Conference: November 10-11 2006; Exhibition opening and performance: November 10, 2006.

In our everyday life we constantly have to cope more or less successfully with interfaces. We use the mobile phone, the mp3 player, and our laptop, in order to gain access to the digital part of our life. In recent years this situation has led to the creation of new interdisciplinary subjects like "Interaction Design" or "Physical Computing".

We live between two worlds, our physical environment and the digital space. Technology and its digital space are our second nature and the interfaces are our points of access to this technosphere.

Since artists started working with technology they have been developing interfaces and modes of interaction. The interface itself became an artistic theme.

The project INTERFACE and SOCIETY investigates how artists deal with the transformation of our everyday life through technical interfaces. With the rapid technological development a thorough critique of the interface towards society is necessary.

The role of the artist is thereby crucial. S/he has the freedom to deal with technologies and interfaces beyond functionality and usability. The project INTERFACE and SOCIETY is looking at this development with a special focus on the artistic contribution.

INTERFACE and SOCIETY is an umbrella for a range of activities throughout 2006 at Atelier Nord in Oslo.

18:33 Posted in Future interfaces | Permalink | Comments (0) | Tags: future interfaces

Sep 15, 2005

iCare Haptic Interface for the blind

Via the Presence-L Listserv

(from the iCare project web site)

iCare Haptic Interface will allow individuals who are blind to explore objects using their hands. Their hand movements will be captured through the Datagloves and spatial features of the object will be captured through the video cameras.

The system will find correlations between spatial features, hand movements and haptic sensations for a given object. In the test phase, when the object is detected by the camera, the system will inform the user of the presence of the object by generating characteristic haptic feedback. iCare Haptic Interface will be an interactive display where users can seek information from the system, manipulate virtual objects to actively explore them and recognize the objects.

More to explore

15:35 Posted in Future interfaces | Permalink | Comments (0) | Tags: Positive Technology, Future interfaces

Sep 05, 2005

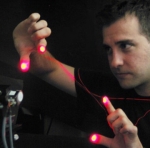

Laser-based tracking for HCI

Via FutureFeeder

Smart Laser Scanner is a high-resolution human interface that tracks a bare finger as it moves through 3D space. Developed by Alvaro Cassinelli, Stephane Perrin & Masatoshi Ishikawa, the system uses a laser, an array of moveable micro-mirrors, a photodetector (an instrument that detects light), and special software written by the team to collect finger-motion data.

The laser sends a beam of light to the array of micro-mirrors, which redirect the laser beam to shine on the fingertip. A photodetector senses light reflected off the finger and works with the software to minutely and quickly adjust the micro-mirrors so that they constantly beam the laser light onto the fingertip. According to Cassinelli and his co-workers, the system's components could eventually be integrated into portable devices.
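The adjust-and-follow behaviour described above is a feedback loop, and a toy version can be sketched in a few lines of Python. This is only an illustration under strong assumptions, not the team's software: the offset between beam and fingertip is computed directly here, whereas the real system has to infer it from the photodetector signal, and the function name `track` and the gain value are hypothetical.

```python
from typing import Iterable, List, Tuple

Point = Tuple[float, float]


def track(finger_path: Iterable[Point],
          start: Point = (0.0, 0.0),
          gain: float = 0.6) -> List[Point]:
    """Steer a beam toward a moving fingertip with proportional feedback.

    At each step the beam moves a fixed fraction (gain) of the way toward
    the finger's current position. Returns the beam position after each step.
    """
    aim_x, aim_y = start
    aims = []
    for finger_x, finger_y in finger_path:
        # Assumed: the sensing stage yields the offset between beam and finger.
        err_x, err_y = finger_x - aim_x, finger_y - aim_y

        # Nudge the mirrors so the beam closes part of that offset.
        aim_x += gain * err_x
        aim_y += gain * err_y
        aims.append((aim_x, aim_y))
    return aims
```

With a gain between 0 and 1 the remaining offset shrinks at every step, so the beam settles on a stationary finger and trails a moving one by a small lag.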

More to explore

The Smart Laser Scanner website (demo videos available for download)

15:25 Posted in Future interfaces | Permalink | Comments (0) | Tags: Positive Technology, Future interfaces